We present a novel approach for 3D/2D intraoperative registration during neurosurgery via cross-modal inverse neural rendering. Our approach separates the implicit neural representation into two components, handling anatomical structure preoperatively and appearance intraoperatively. This disentanglement is achieved by controlling the appearance of a Neural Radiance Field (NeRF) with a multi-style hypernetwork. Once trained, the implicit neural representation serves as a differentiable rendering engine, which can be used to estimate the surgical camera pose by minimizing the dissimilarity between its rendered images and the target intraoperative image. We tested our method on retrospective patient data from clinical cases, showing that it outperforms the state of the art while meeting current clinical standards for registration.

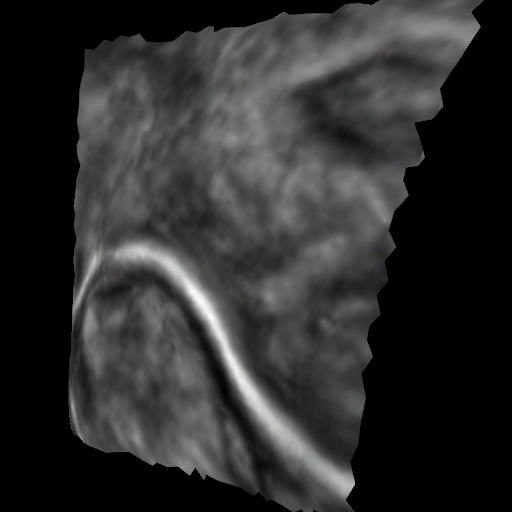

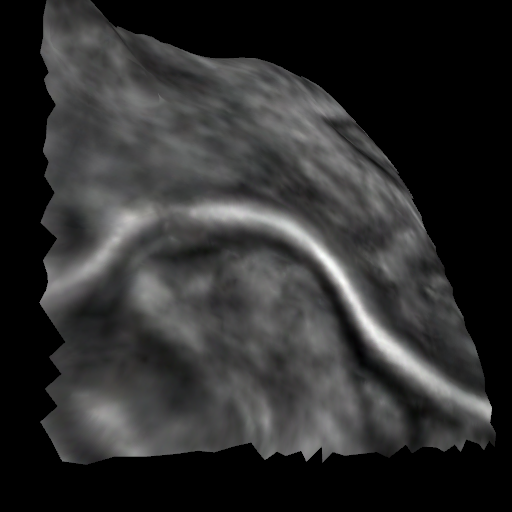

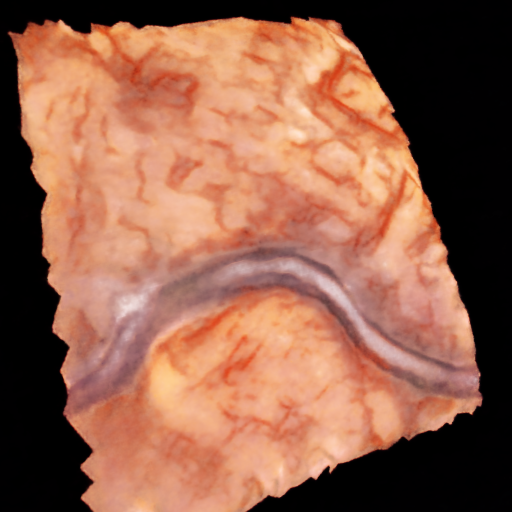

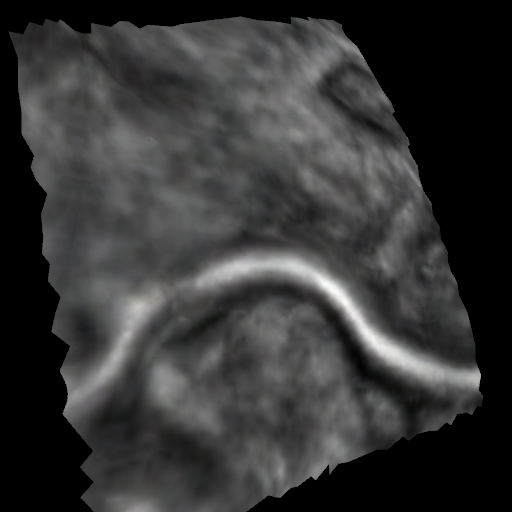

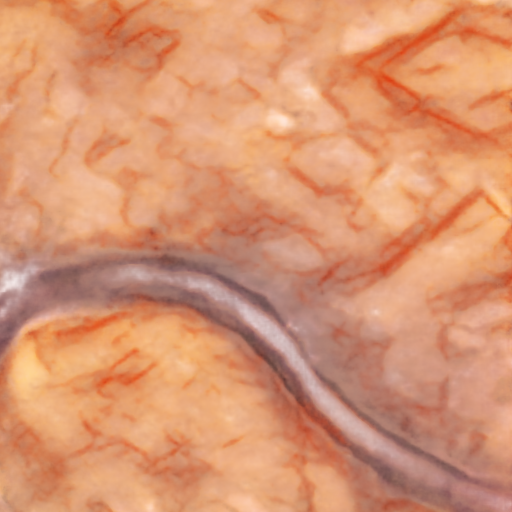

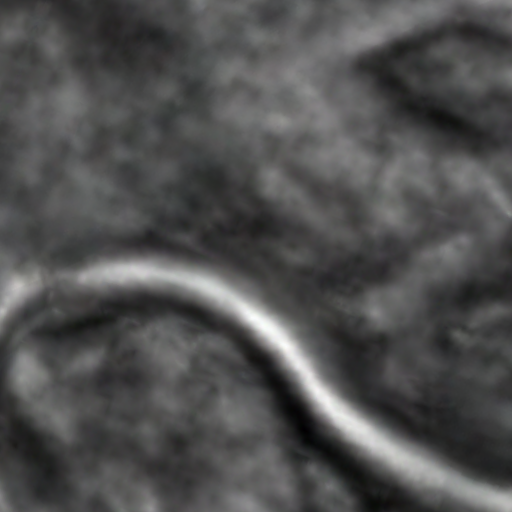

Our method renders novel views with varying appearances while keeping the anatomical structure fixed. Because our NeRF is created from and registered to a preoperative scan, we can render novel views with intraoperative appearance and relate them back to the preoperative volume.
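The separation of fixed structure from variable appearance can be illustrated with a toy model. The sketch below is not our network: the soft-sphere `density`, the linear `hypernetwork`, the two-dimensional style code, and all numeric weights are hypothetical stand-ins chosen only to show the mechanism, namely that a style code changes the parameters of the appearance head while the structural branch is left untouched.

```python
import math

def density(point):
    """Style-independent structure: a soft sphere of radius 1 (toy stand-in for anatomy)."""
    r = math.sqrt(sum(c * c for c in point))
    return 1.0 / (1.0 + math.exp(10.0 * (r - 1.0)))

# Hypothetical hypernetwork parameters: a linear map from a 2-D style code
# to the 3 weights and 1 bias of a tiny appearance head.
HYPER_W = [[0.5, -0.2], [0.1, 0.4], [-0.3, 0.3], [0.2, 0.1]]
HYPER_B = [0.0, 0.1, -0.1, 0.05]

def hypernetwork(style):
    """Map a style code to the parameters of the appearance head."""
    return [sum(w * s for w, s in zip(row, style)) + b
            for row, b in zip(HYPER_W, HYPER_B)]

def appearance(point, style):
    """Intensity at a point, computed with weights predicted by the hypernetwork."""
    w0, w1, w2, bias = hypernetwork(style)
    z = w0 * point[0] + w1 * point[1] + w2 * point[2] + bias
    return 1.0 / (1.0 + math.exp(-z))  # sigmoid keeps the intensity in (0, 1)

def query(point, style):
    """Return (density, intensity); only the intensity depends on the style."""
    return density(point), appearance(point, style)
```

Querying the same point with two different style codes, for example `query(p, (1.0, 0.0))` and `query(p, (0.0, 1.0))`, returns the same density but different intensities, which is the behaviour the disentanglement is meant to provide.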

Using the NeRF, conditioned on the intraoperative image via the hypernetwork, we perform registration.
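Registration by inverse rendering can be sketched as gradient descent on the pose through a differentiable renderer. The example below is a deliberately simplified illustration under stated assumptions: the renderer draws a Gaussian blob rather than querying a NeRF, the pose is a 2-D translation rather than a full camera pose, mean squared error stands in for the dissimilarity measure, and the names `render`, `loss_and_grad`, and `register` as well as the step size are hypothetical.

```python
import math

SIZE = 16    # toy image is SIZE x SIZE pixels
SIGMA = 3.0  # width of the rendered blob

def render(pose):
    """Toy differentiable renderer: a Gaussian blob centred at pose = (tx, ty)."""
    tx, ty = pose
    return [[math.exp(-((x - tx) ** 2 + (y - ty) ** 2) / (2 * SIGMA ** 2))
             for x in range(SIZE)] for y in range(SIZE)]

def loss_and_grad(pose, target):
    """Mean squared error between render(pose) and target, with its analytic gradient."""
    tx, ty = pose
    image = render(pose)
    n = SIZE * SIZE
    loss = gx = gy = 0.0
    for y in range(SIZE):
        for x in range(SIZE):
            diff = image[y][x] - target[y][x]
            loss += diff * diff / n
            # d(image)/d(tx) = image * (x - tx) / SIGMA^2, and likewise for ty
            gx += 2 * diff * image[y][x] * (x - tx) / SIGMA ** 2 / n
            gy += 2 * diff * image[y][x] * (y - ty) / SIGMA ** 2 / n
    return loss, (gx, gy)

def register(target, init_pose, lr=20.0, steps=300):
    """Estimate the pose by plain gradient descent on the image dissimilarity."""
    pose = init_pose
    for _ in range(steps):
        _, (gx, gy) = loss_and_grad(pose, target)
        pose = (pose[0] - lr * gx, pose[1] - lr * gy)
    return pose
```

Calling `register(render((9.0, 6.0)), (7.0, 8.0))` starts from a perturbed pose and moves it toward the pose that generated the target. As with any local optimization of this kind, the initial render must overlap the target enough for the gradient to be informative.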