Maximilian Fehrentz

I'm a first-year PhD candidate with Nassir Navab in the Computer Aided Medical Procedures (CAMP) group at TU Munich. Previously, I was a research intern at Harvard Medical School & Brigham and Women's Hospital with Nazim Haouchine and a student researcher at LMU University Hospital Munich with Christian Heiliger. In Boston, I worked on 2D/3D registration in neurosurgery using multi-modal radiance fields. In Munich, I focused on 4D reconstruction for visceral surgery with CT-coupled 4D Gaussian Splatting. I am also part of TUM.ai, OneAIM, and CDTM.

Research

I build intelligent systems for surgery, with a focus on brain and visceral surgery. In particular, I work on intraoperative 4D reconstruction, 4D language-aligned representations, multi-modal representations that leverage 3D preoperative imaging (CT, MRI, …), and multi-modal reasoning based on procedural knowledge embedded in (M)LLMs. To complement fine-grained 4D spatiotemporal understanding, I am also interested in long-form video understanding for reasoning over several hours of surgery. My work primarily involves 4D radiance fields, MLLMs, and a range of (vision) foundation models. Because this work sits at the intersection of medical interventions and AI, I collaborate closely with surgeons and spend time in the operating room.

Selected Publications

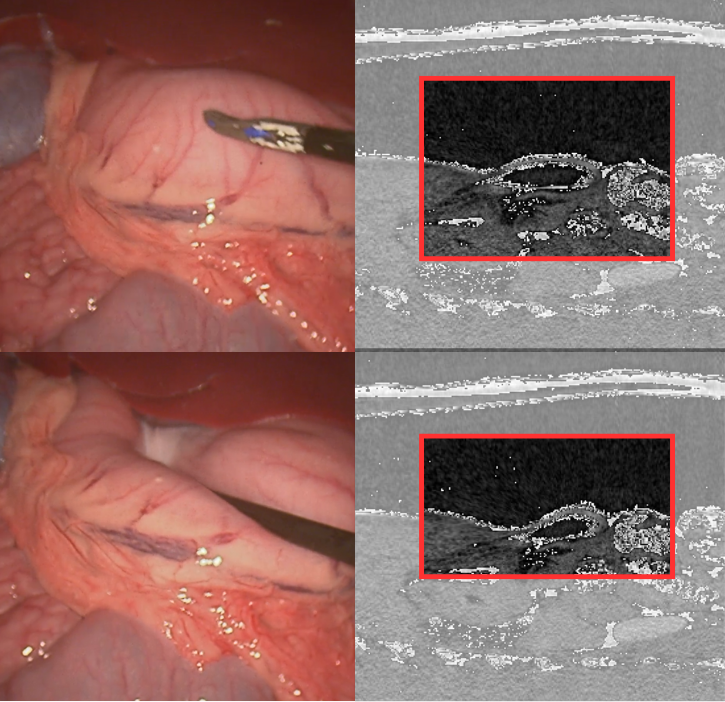

BridgeSplat: Bidirectionally Coupled CT and Non-rigid Gaussian Splatting for Deformable Intraoperative Surgical Navigation

Maximilian Fehrentz, Alexander Winkler, Thomas Heiliger, Nazim Haouchine, Christian Heiliger, Nassir Navab

MICCAI, 2025

project page / arXiv

Coupling 3D preoperative imaging (CT) and intraoperative 4D Gaussian Splatting.

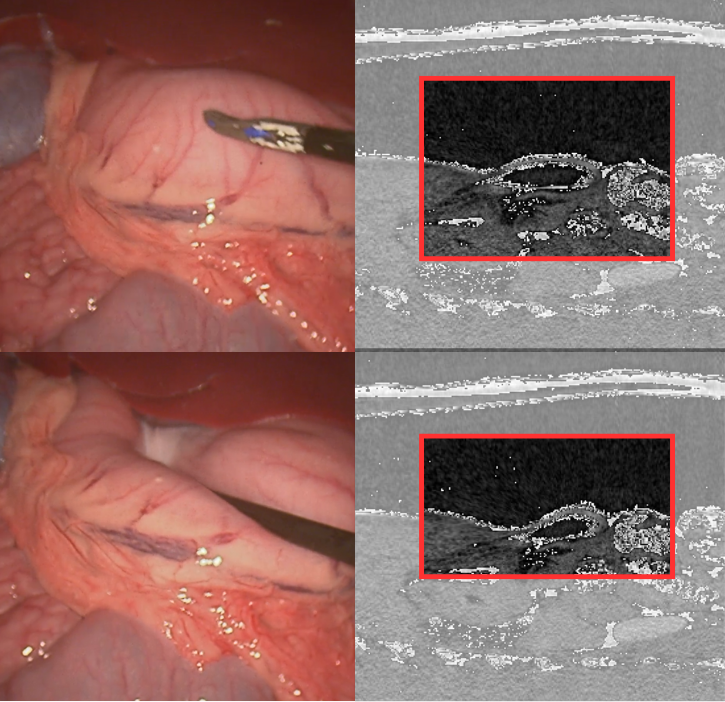

Intraoperative Registration by Cross-Modal Inverse Neural Rendering

Maximilian Fehrentz, Mohammad Farid Azampour, Reuben Dorent, Hassan Rasheed, Colin Galvin, Alexandra Golby, William M. Wells III, Sarah Frisken, Nassir Navab, Nazim Haouchine

MICCAI, 2024

project page / arXiv

Intraoperative registration of the preoperative MRI to the brain using a cross-modal NeRF.

This website is adapted from Jon Barron's template.